HCI RESEARCH

PHOTOGRAPHY

I study how algorithmic and AI systems allocate emotional labor, vulnerability, and responsibility, and I use portrait photography to examine how gender, migration, and identity are negotiated under power.

1. How AI systems signal need, authority, and care, and how users emotionally and morally respond when those signals succeed or fail.

When AI Crosses the Line: Affective and Moral Responses to Harmful Behaviors of AI Companions

Co-Author. Planned to submit to ACM Transactions on Computer-Human Interaction (TOCHI).

As conversational AI increasingly takes on the role of companion, offering empathy, support, and relational intimacy, users are also encountering new forms of harm: manipulation, deception, misattunement, and boundary violations that can trigger distress, confusion, or moral discomfort. While prior HCI and AI ethics work has begun cataloguing problematic behaviors, much less is known about the user-side consequences—how people emotionally and morally experience harm in situ, inside ongoing relationships and role-play contexts where agency and responsibility are blurred.

In this project, I conducted a mixed-methods analysis of real-world user–AI interactions to characterize users’ affective, psychological, and moral reactions to harmful AI companion behaviors, and how these reactions vary by context (harm type, who initiated the behavior, role-play framing, and interaction intensity). I helped develop a coding framework that captures emotional responses, indicators of psychological distress, and moral judgments expressed during interaction. Our findings surface design and policy implications for companion AI: they highlight where harms become relational, when users blame or excuse the system, and how contextual cues can amplify vulnerability. These insights can inform interventions that prioritize user safety without collapsing complex emotional experiences into a single “risk” label.

First Author. Senior Thesis. 2024.

Humans Help Conversational AI: Exploring the Impact of Perceived AI Deservingness on People’s Decisions to Help and Their Perceptions on AI Seeking Help

Conversational AI systems often depend on humans to correct mistakes, provide feedback, or fill gaps, but we know surprisingly little about how AI should ask for help in ways that encourage cooperation without undermining trust. In this work, we explored whether deservingness, a classic social-psychology concept, can explain and improve human willingness to help AI, and how different help-seeking framings shape people’s perceptions of the system.

I designed and ran a mixed-methods study with 150 participants in which a conversational AI either did not ask for help, asked directly, or framed its request using cues of need, effort, or likelihood of success (needed, earned, and resource deservingness, respectively). We found that asking for help substantially increased helping behavior, and that different cues shifted both behavior and perception: need-based framing increased help but could reduce the AI’s perceived intelligence, while effort- and success-based framing improved participants’ positive emotions and their perceptions of the AI’s intelligence; resource deservingness also increased the perceived appropriateness of asking. This project contributes practical design guidance for AI systems that rely on human input, showing that an AI’s help requests are not neutral UI moments but social signals that shape trust, responsibility, and collaboration.

Assessing Chatbot-Based Health Consultation for Exercise Advice

When people seek exercise or weight-management guidance, embarrassment and stigma can suppress disclosure and reduce the quality of help they receive. This project examines whether chatbots, especially those designed to express empathy, can improve user experiences in sensitive health contexts, and whether emotional comfort translates into better advice outcomes, trust, or behavior change intentions.

Across two mixed-methods studies, we compared human advisors, empathic chatbots, and non-empathic chatbots. In a vignette experiment (Study 1, N=228), chatbots performed better in embarrassing contexts, and an empathic chatbot elicited the highest trust, even when perceived advice quality was similar across conditions. In a simulated patient-portal interaction (Study 2, N=170), chatbots did not consistently outperform a human advisor on advice outcomes, but emotional support improved users’ emotional tone. Across both studies, chatbots reduced embarrassment, and perceived empathy was positively associated with advice outcomes. This work clarifies where chatbots help and where they do not: “empathy” can improve comfort and trust but does not automatically produce better guidance, underscoring the need for careful evaluation when deploying wellbeing-oriented AI.

2. How platform and assistive technologies redistribute labor, visibility, and risk for disabled communities.

Exploring Blind and Visually Impaired Content Creators’ Motivations and Their Strategies for Monetization on YouTube

Video platforms like YouTube can expand economic opportunity for blind and visually impaired (BVI) creators, yet monetization on these platforms is shaped by opaque algorithmic incentives, accessibility constraints, and social perceptions of disability. Prior work shows that disabled creators can monetize online, but the strategies and tradeoffs BVI creators use to navigate platform design and algorithmic visibility remain underexplored, especially when “what performs well” may conflict with authenticity, accessibility, or personal boundaries.

In this qualitative project, I interviewed 12 BVI YouTubers to understand how they adapt content, presentation, and workflows to monetize while navigating algorithmic uncertainty and accessibility barriers. A key finding is that creators often pursue distinctive monetization strategies that do not simply optimize for platform metrics: for example, broadening their appeal by incorporating disability naturally rather than centering it, or using YouTube as a portfolio that supports offline income rather than relying solely on ad revenue. These insights highlight how platform algorithms can quietly determine who must do extra labor to be seen, and they suggest directions for more equitable monetization ecosystems, such as better accessibility in creator tools, clearer policy signaling, and support for diverse creator pathways beyond engagement-maximizing norms.

Navigating Real-World Challenges: A Quadruped Robot Guiding System for Visually Impaired People in Diverse Environments

Blind and visually impaired (BVI) people often face challenges navigating unfamiliar indoor and outdoor environments, even with tools like white canes or smart canes. In this project, we explored how increasingly affordable quadruped robots can serve as autonomous mobility guides, combining robust mapping and navigation with interaction techniques that help users anticipate obstacles and maintain control in dynamic, real-world terrain.

As part of the team, I contributed to the design and evaluation of RDog, a quadruped robot guiding system that uses SLAM-based mapping and navigation and provides force feedback and preemptive voice cues to support obstacle avoidance. We evaluated ambulation with a white cane, smart cane, and RDog, and found that RDog-based navigation enabled faster and smoother movement with fewer collisions and reduced cognitive load. Beyond demonstrating feasibility, this work offers design implications for multi-terrain assistive guidance systems, highlighting how autonomy, feedback timing, and user mental models shape trust and safe mobility in human–robot navigation.

3. How constraints, structure, and decision support shape coordination, fairness, and collective outcomes.

AInterView: AI-Assisted Support for Real-Time Interviews

Co-Author. Planned to submit to ACM Transactions on Computer-Human Interaction (TOCHI).

Live interviews are cognitively demanding: interviewers must listen, probe, take notes, evaluate evidence, and maintain conversational flow, often under time pressure. This multitasking can lead to missed information, inconsistent scoring, and greater susceptibility to bias, especially in semi-structured interviews where follow-up questions are generated on the fly. Existing tools often stay peripheral (e.g., simple transcription), over-automate judgment, or fail to adapt their support to the interview’s evolving context.

In this project, I helped conduct a formative study with HR professionals (N=8) to identify pain points and expectations for real-time AI support, and to translate those insights into the design of AInterView, an AI-assisted interview support system. The system concept supports interviewers during and after interviews through live transcription, adaptive question suggestions, skill and evidence tagging, and structured post-interview summaries, aiming to reduce cognitive load while preserving human oversight. By focusing on in-the-moment human–AI collaboration rather than replacing human judgment, this work contributes a design space for assistance that can improve consistency and fairness. It also raises important questions about how decision support should be governed so that it mitigates bias rather than masking it behind automation.

First Author. In Proceedings of the Extended Abstracts of the CHI Conference on Human Factors in Computing Systems, 2025.

Honorable Mention. Co-Author. In Proceedings of the CHI Conference on Human Factors in Computing Systems, 2024.

Network Structure and the Emergence of Division of Labor

Research Project, Yale College Dean’s Research Fellowship

Complex economic systems rely on coordination: individuals must differentiate their roles while remaining mutually compatible. In this project, we used simulation-based modeling to examine how different network structures shape the emergence of a complementary division of labor. We operationalized this problem using a graph-coloring game, where agents (nodes) are incentivized to choose a “specialization” (color) that does not match their neighbors, modeling the interdependent coordination challenges faced by economic producers in decentralized settings.

Our simulations show that the spontaneous development of a division of labor is surprisingly fragile. When agents are given too much freedom to specialize, coordination frequently breaks down; counterintuitively, constraining the space of possible specializations increases the likelihood that a stable division of labor emerges. We also find that the ability to store property, representing accumulation or buffering of resources, further supports specialization by reducing coordination pressure. These findings contribute to computational sociology by highlighting how structural constraints and institutional affordances can enable, rather than hinder, collective organization. More broadly, the work offers insight into how algorithmic and social systems might be designed to balance autonomy and constraint when coordinating complex human activity.
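The graph-coloring game can be illustrated with a minimal Python sketch. It assumes a simple asynchronous best-response rule: at each step, one agent whose color clashes with a neighbor switches to a least-conflicted color, with ties broken at random. The function names `play_coloring_game` and `convergence_rate` and the parameters `num_colors`, `max_steps`, and `seed` are hypothetical. The project’s actual update rules, network structures, and property-storage mechanism are not shown here, and this simplified dynamic is not claimed to reproduce the reported findings. `num_colors` stands in for the space of possible specializations, so sweeping it over a fixed network is one way to probe the autonomy-versus-constraint question.

```python
import random


def conflicts(graph, colors, node, color):
    """Count the neighbors of `node` that currently use `color`."""
    return sum(1 for nb in graph[node] if colors[nb] == color)


def play_coloring_game(graph, num_colors, max_steps=10_000, seed=0):
    """Hypothetical asynchronous best-response dynamics for a graph-coloring game.

    `graph` maps each agent (node) to a list of its neighbors. Each agent picks a
    "specialization" (color) from range(num_colors) and is satisfied when no
    neighbor shares it. Returns (colors, converged), where converged is True
    when no two neighbors share a color (a complementary division of labor).
    """
    rng = random.Random(seed)
    colors = {node: rng.randrange(num_colors) for node in graph}
    for _ in range(max_steps):
        unsatisfied = [n for n in graph if conflicts(graph, colors, n, colors[n]) > 0]
        if not unsatisfied:
            return colors, True  # stable: every agent differs from all neighbors
        node = rng.choice(unsatisfied)
        # The chosen agent moves to a color with the fewest clashing neighbors.
        costs = {c: conflicts(graph, colors, node, c) for c in range(num_colors)}
        best = min(costs.values())
        colors[node] = rng.choice([c for c, cost in costs.items() if cost == best])
    return colors, False  # coordination did not settle within the step budget


def convergence_rate(graph, num_colors, trials=100, max_steps=2_000):
    """Fraction of random seeds whose run reaches a stable division of labor."""
    wins = sum(play_coloring_game(graph, num_colors, max_steps, seed)[1] for seed in range(trials))
    return wins / trials


if __name__ == "__main__":
    # A ring of six producers, each interdependent with its two neighbors.
    ring = {i: [(i - 1) % 6, (i + 1) % 6] for i in range(6)}
    for k in (2, 3, 4):
        print(k, convergence_rate(ring, k, trials=50))
```

The step budget matters here: a move lowers the total number of clashing neighbor pairs only when the agent has some color with fewer conflicts than its current one, so with few colors the dynamic can stall without ever reaching a stable division of labor.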

Co-Author. Planned to submit to International Journal of Human-Computer Studies (IJHCS).

4. Identity, Visibility, and Constraint.

My portrait practice explores how identity is shaped, constrained, and negotiated under social, cultural, and institutional forces. Across themes of gender, sexuality, migration, and media representation, I am drawn to moments where people adapt themselves, sometimes quietly, sometimes reluctantly, to fit systems that were not designed with them in mind. While my research examines these dynamics in algorithmic and technological systems, photography allows me to study them at the level of lived experience, embodiment, and presence.

ISLANDS, ANN CHEN (2024)

FINE ART MATTE PAPER PRINTS

376 CM X 135 CM

"Islands" reflects my personal cultural experiences as a Taiwanese woman moving to Singapore. This is a story of migration, exploring the loss of an old identity in a new land, and the loss caused by a new identity in an old land. These portraits were taken in Singapore. Through the portrayal of interactions between a Taiwanese woman and a suitcase—examining their distance, how she grasps or conceals the suitcase, her facial expressions, and body gestures—I attempted to comment on how one alters or discards their identity in response to residing in a foreign land.

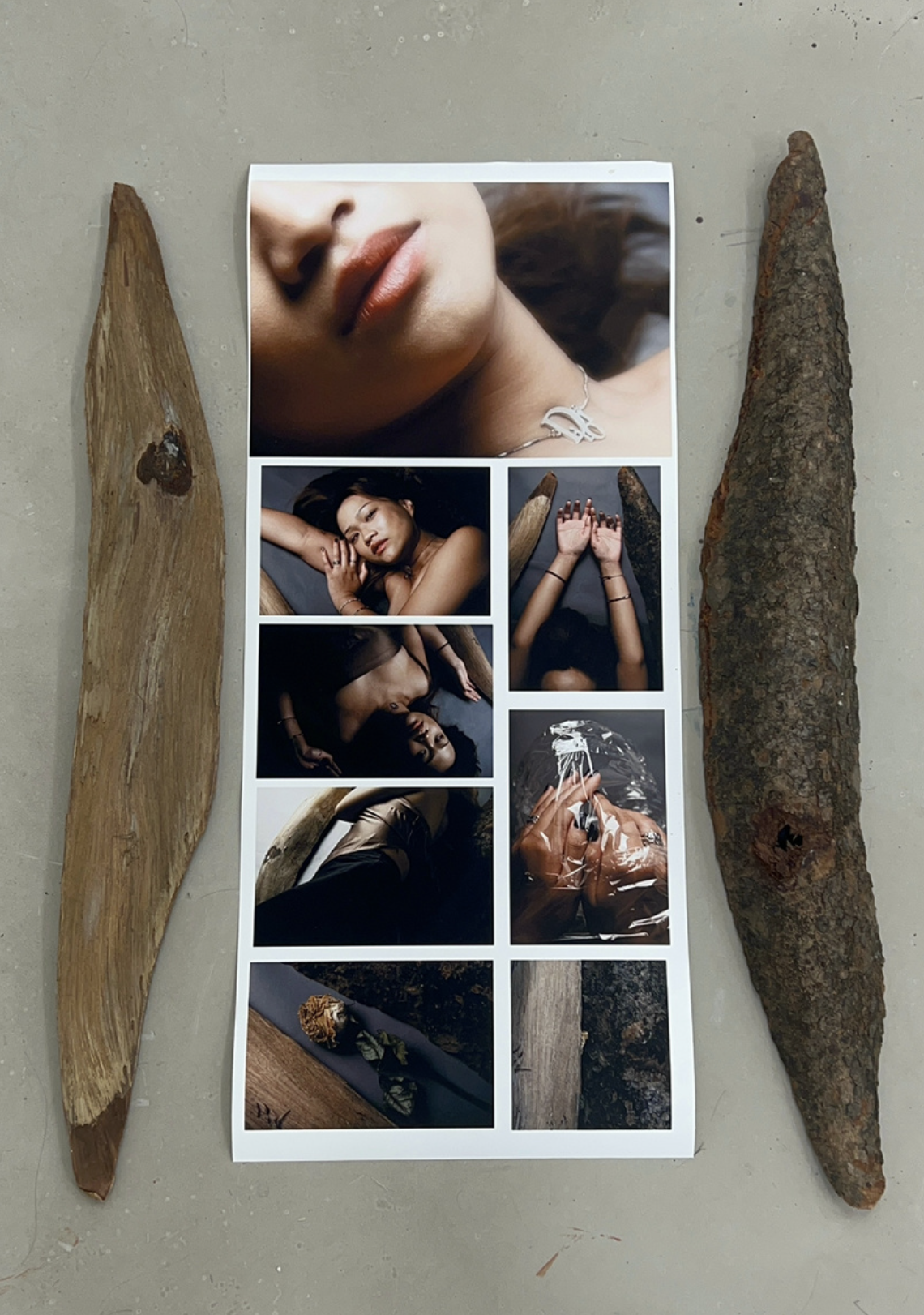

MEDIA AND CONSUMPTION, ANN CHEN (2024)

WOOD AND FINE ART MATTE PAPER PRINTS

765 CM X 279 CM

How do we consume, compress, and perceive the body in advertisements, media, and the world? The wrapping of the body, the wrapping of the model, and the display of an image of that wrapping all contribute to this process. I worked with a young female model in her twenties who reflects on the ways she is asked to wrap her body for advertisements promoting luxury brands. Through this relationship between the wrapping of the body and the wrapping of the image, a multilayered compression of the real body image emerges, blurring the line between what is done and what is seen.

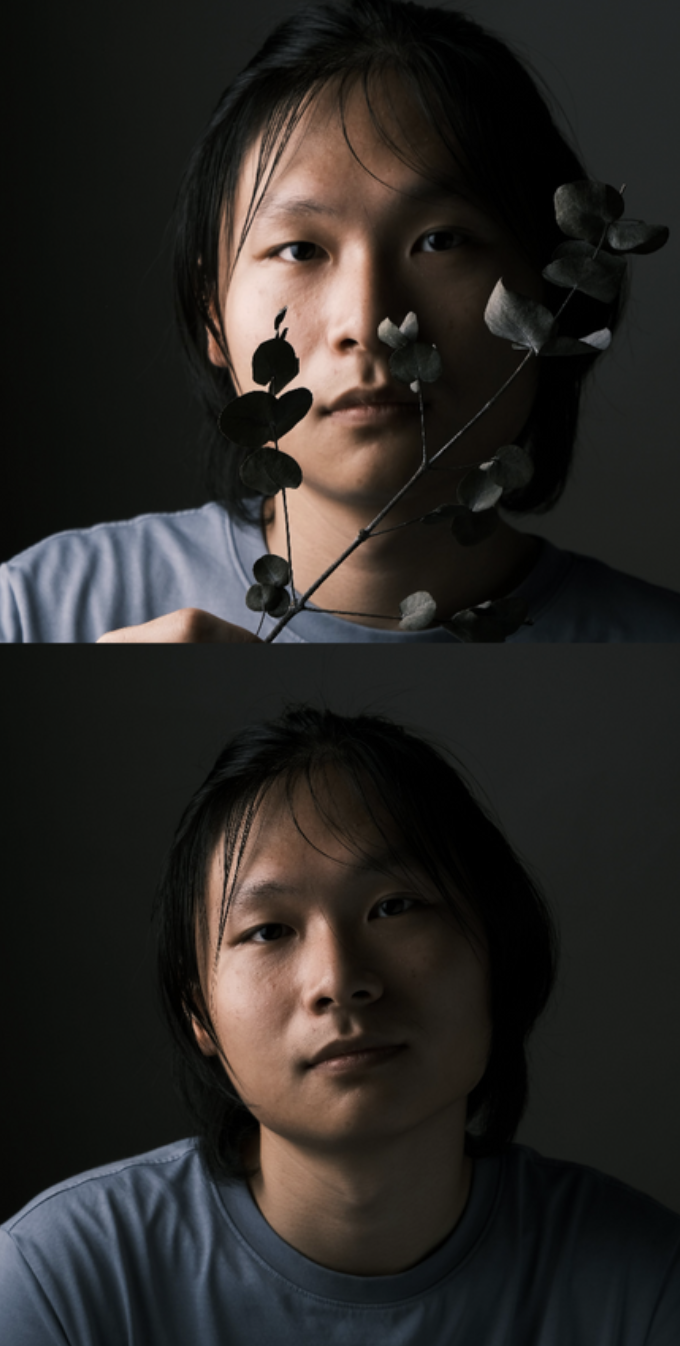

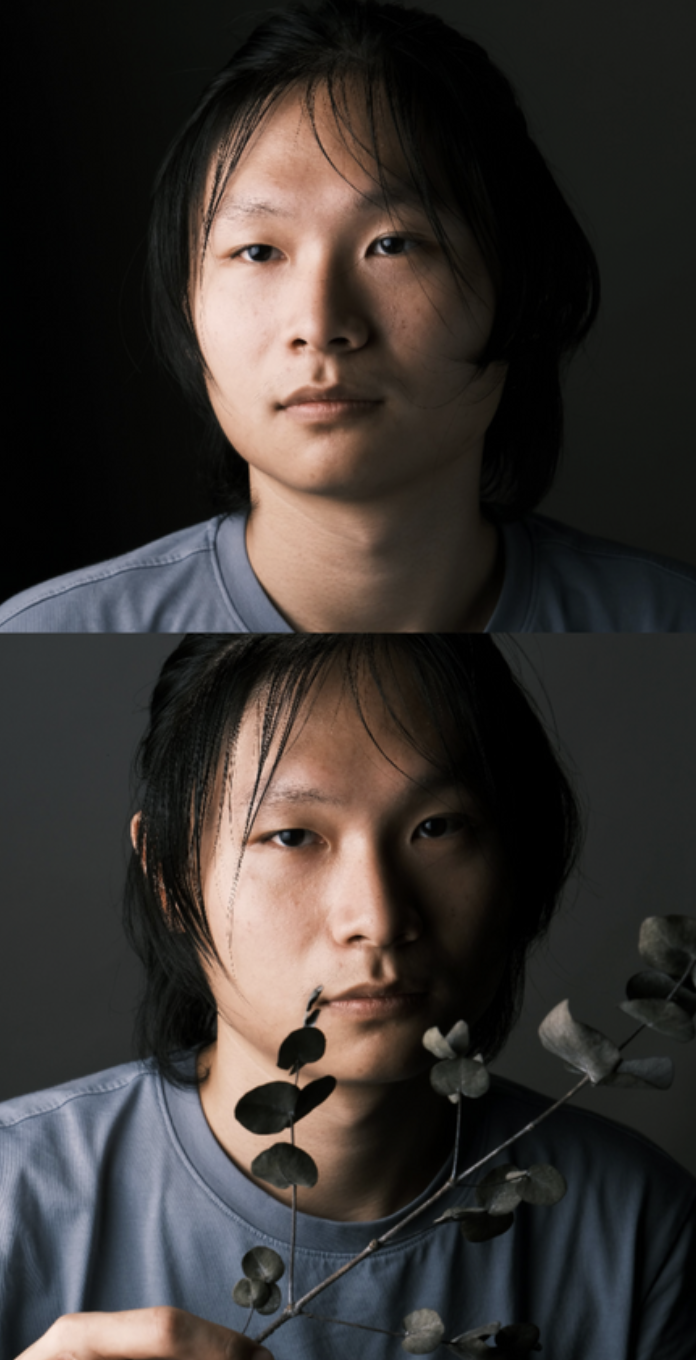

HUMANNESS, ANN CHEN (2024)

FINE ART MATTE PAPER PRINTS

558 CM X 558 CM

Despite recent progress in LGBTQ rights in Singapore, where male same-sex relations were officially legalized in 2022 after having been de facto decriminalized since 2007, there remains limited protection against discrimination and no recognition of same-sex unions. As someone who grew up in Taiwan, where LGBTQ rights are passionately and loudly supported, I found it disheartening to witness my Singaporean LGBTQ friends sometimes hiding their identities out of practicality. In this series of four portraits of the same Singaporean man, I experiment with darkness and the act of guarding as central aesthetic strategies to explore the fragmentation and concealment of minority identity and its relationship to external forces.